
The Estimator class

An introduction to the Estimator class.

The Estimator class is the base for all meta-analyses in NiMARE. A general rule of thumb for Estimators is that they ingest Datasets and output MetaResult objects.
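To make the "Datasets in, MetaResult out" contract concrete, here is a minimal pure-Python sketch of the pattern. ToyDataset, ToyEstimator, and ToyMetaResult are hypothetical stand-ins, not NiMARE classes. The trailing-underscore attribute for fitted state and the copied estimator on the result mirror what this example shows for the real classes (null_distributions_, and a MetaResult that stores a copy of the Estimator).

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ToyDataset:
    """Hypothetical stand-in for a Dataset: study IDs plus one value per study."""
    ids: list
    values: list


@dataclass
class ToyMetaResult:
    """Hypothetical stand-in for a MetaResult: named maps plus a copy of the estimator."""
    estimator: object
    maps: dict = field(default_factory=dict)

    def get_map(self, name):
        return self.maps[name]


class ToyEstimator:
    """Hypothetical estimator: ingests a ToyDataset and returns a ToyMetaResult."""

    def fit(self, dataset):
        n = len(dataset.values)
        mean = sum(dataset.values) / n
        # Fitted state lives on the estimator (trailing underscore, sklearn-style).
        self.n_studies_ = n
        # The result keeps its own copy, so refitting this estimator won't alter it.
        return ToyMetaResult(estimator=copy.deepcopy(self), maps={"mean": mean})


dset = ToyDataset(ids=["a", "b", "c"], values=[1.0, 2.0, 3.0])
estimator = ToyEstimator()
result = estimator.fit(dset)
print(result.get_map("mean"))  # 2.0
```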

Start with the necessary imports

import os

Load Dataset

from nimare.dataset import Dataset

from nimare.utils import get_resource_path

dset_file = os.path.join(get_resource_path(), "nidm_pain_dset.json")

dset = Dataset(dset_file)

# We will reduce the Dataset to the first 10 studies

dset = dset.slice(dset.ids[:10])

The Estimator

Coordinate-based Estimators allow you to provide a specific KernelTransformer

Each CBMA Estimator’s default KernelTransformer will always be the most appropriate type for that algorithm, but you can swap out the kernel as you wish.

For example, an ALE Estimator could be initialized with an MKDAKernel, though there is no guarantee that the results would make sense.
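Why might an MKDA kernel not make sense inside ALE? ALE combines per-study modeled activation (MA) maps as a probabilistic union, 1 - prod(1 - MA). The toy 1D sketch below uses an arbitrary grid, foci, and kernel widths (it is not NiMARE's kernel code) to contrast Gaussian, probability-like MA values with MKDA-style binary spheres. With binary values, any voxel inside a single sphere already reaches an ALE of 1, so two overlapping studies look no different from one.

```python
import numpy as np

# Toy 1D voxel grid and one focus per "study" (made-up numbers, not real data).
grid = np.arange(20, dtype=float)
foci = [5.0, 6.0, 14.0]


def gaussian_ma(center, fwhm=4.0):
    """ALE-style modeled activation: Gaussian values kept below 1 (toy scale)."""
    sigma = fwhm / np.sqrt(8 * np.log(2))
    ma = np.exp(-((grid - center) ** 2) / (2 * sigma**2))
    return 0.5 * ma  # peak of 0.5 so values behave like probabilities


def binary_ma(center, radius=2.0):
    """MKDA-style modeled activation: 1 inside the sphere, 0 outside."""
    return (np.abs(grid - center) <= radius).astype(float)


def ale_union(ma_maps):
    """Probabilistic union used by ALE: 1 - prod(1 - MA) across studies."""
    return 1.0 - np.prod(1.0 - np.stack(ma_maps), axis=0)


ale_gauss = ale_union([gaussian_ma(f) for f in foci])
ale_binary = ale_union([binary_ma(f) for f in foci])

# Voxel 5 is near two studies' foci; voxel 14 is near only one.
print(ale_gauss[5], ale_gauss[14])    # Gaussian MA: voxel 5 scores higher
print(ale_binary[5], ale_binary[14])  # binary MA: both saturate at 1.0
```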

from nimare.meta.cbma import ALE

from nimare.meta.kernel import MKDAKernel

meta = ALE(kernel_transformer=MKDAKernel)

results = meta.fit(dset)

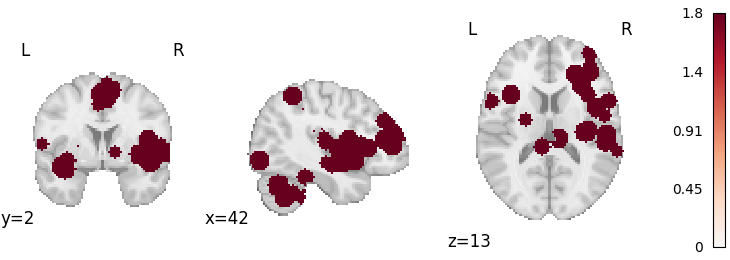

from nilearn.plotting import plot_stat_map

plot_stat_map(results.get_map("z"), draw_cross=False, cmap="RdBu_r")


CBMA Estimators can accept KernelTransformers in a few different ways

from nimare.meta.kernel import ALEKernel

Initializing the Estimator with a KernelTransformer class alone will use the kernel's default settings.

meta = ALE(kernel_transformer=ALEKernel)

print(meta.kernel_transformer)

Out:

ALEKernel()

You can also initialize the Estimator with an already-initialized KernelTransformer object.

kernel = ALEKernel()

meta = ALE(kernel_transformer=kernel)

print(meta.kernel_transformer)

Out:

ALEKernel()

This is especially useful if you want to initialize the KernelTransformer with non-default parameter values.

kernel = ALEKernel(sample_size=20)

meta = ALE(kernel_transformer=kernel)

print(meta.kernel_transformer)

Out:

ALEKernel(sample_size=20)
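The first two options above differ only in whether you pass a class or an instance. Below is a minimal sketch of how an estimator could accept both, assuming a hypothetical ToyKernel class and resolve_kernel helper; this is not NiMARE's internal code.

```python
import inspect


class ToyKernel:
    """Hypothetical kernel transformer with one tunable parameter."""

    def __init__(self, sample_size=None):
        self.sample_size = sample_size

    def __repr__(self):
        if self.sample_size is None:
            return "ToyKernel()"
        return f"ToyKernel(sample_size={self.sample_size})"


def resolve_kernel(kernel_transformer):
    """Instantiate a kernel class with defaults; pass an instance through unchanged."""
    if inspect.isclass(kernel_transformer):
        return kernel_transformer()
    return kernel_transformer


print(resolve_kernel(ToyKernel))                  # ToyKernel()
print(resolve_kernel(ToyKernel(sample_size=20)))  # ToyKernel(sample_size=20)
```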

You can also provide specific initialization values to the KernelTransformer via the Estimator by including keyword arguments that start with kernel__.

meta = ALE(kernel__sample_size=20)

print(meta.kernel_transformer)

Out:

ALEKernel(sample_size=20)
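The kernel__ prefix resembles the double-underscore convention scikit-learn uses for nested parameters (<component>__<parameter>). Here is a hypothetical sketch of that routing. ToyKernel, ToyEstimator, and split_prefixed_kwargs are invented for illustration. This sketch does not cover how NiMARE combines a kernel__ argument with an already-initialized kernel; the in-place update below is only this sketch's choice.

```python
import inspect


class ToyKernel:
    """Hypothetical kernel transformer with one tunable parameter."""

    def __init__(self, sample_size=None):
        self.sample_size = sample_size


def split_prefixed_kwargs(kwargs, prefix="kernel__"):
    """Split kwargs into those starting with `prefix` (prefix stripped) and the rest."""
    routed = {k[len(prefix):]: v for k, v in kwargs.items() if k.startswith(prefix)}
    remaining = {k: v for k, v in kwargs.items() if not k.startswith(prefix)}
    return routed, remaining


class ToyEstimator:
    """Hypothetical estimator that forwards kernel__* arguments to its kernel."""

    def __init__(self, kernel_transformer=ToyKernel, **kwargs):
        kernel_args, self.other_args = split_prefixed_kwargs(kwargs)
        if inspect.isclass(kernel_transformer):
            # A class is instantiated with the routed arguments.
            kernel_transformer = kernel_transformer(**kernel_args)
        else:
            # An instance is updated in place (a choice made for this sketch only).
            for name, value in kernel_args.items():
                setattr(kernel_transformer, name, value)
        self.kernel_transformer = kernel_transformer


meta = ToyEstimator(kernel__sample_size=20, n_iters=10)
print(meta.kernel_transformer.sample_size)  # 20
print(meta.other_args)                      # {'n_iters': 10}
```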

Most CBMA Estimators have multiple ways to test uncorrected statistical significance

For most Estimators, the two options, defined with the null_method parameter, are "approximate" and "montecarlo".

For more information about these options, see Null methods.

Note that, to measure significance appropriately with the montecarlo method, you need a lot more than 10 iterations. We recommend 10000 (the default value).
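The large iteration count is needed for resolution. In the simplest Monte Carlo scheme, where each iteration contributes one null value (as with a max-statistic FWE null), the smallest attainable p-value is 1/(n_iters + 1). The generic sketch below shows that 10 iterations cannot go below about 0.09. It is not NiMARE's exact procedure; monte_carlo_p is a hypothetical helper that applies the common +1 correction.

```python
import numpy as np


def monte_carlo_p(observed, null_samples):
    """Upper-tail empirical p-value with the common +1 correction."""
    null_samples = np.asarray(null_samples)
    return (np.sum(null_samples >= observed) + 1) / (null_samples.size + 1)


rng = np.random.default_rng(0)
observed = 10.0  # far above anything this standard-normal null will produce
for n_iters in (10, 10000):
    null = rng.normal(size=n_iters)
    # No null value reaches the observed one, so p = 1 / (n_iters + 1) in both cases.
    print(n_iters, monte_carlo_p(observed, null))
```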

mc_meta = ALE(null_method="montecarlo", n_iters=10, n_cores=1)

mc_results = mc_meta.fit(dset)

Out:

100%|##########| 10/10 [00:02<00:00, 3.81it/s]

The null distributions are stored within the Estimators

from pprint import pprint

results = meta.fit(dset)

pprint(meta.null_distributions_)

Out:

{'histogram_bins': array([0.000e+00, 1.000e-05, 2.000e-05, ..., 7.247e-02, 7.248e-02,

7.249e-02]),

'histweights_corr-none_method-approximate': array([5.17138782e-01, 1.68074450e-21, 5.51924442e-02, ...,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00])}

As well as the MetaResult, which stores a copy of the Estimator

pprint(results.estimator.null_distributions_)

Out:

{'histogram_bins': array([0.000e+00, 1.000e-05, 2.000e-05, ..., 7.247e-02, 7.248e-02,

7.249e-02]),

'histweights_corr-none_method-approximate': array([5.17138782e-01, 1.68074450e-21, 5.51924442e-02, ...,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00])}
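Each null distribution above is stored as a histogram: histogram_bins holds the bin values and each histweights_* array holds the matching weights. Below is a minimal sketch of turning such a histogram into an upper-tail p-value for an observed ALE value. hist_null_to_p is a hypothetical helper and the bins and weights are made up, so NiMARE's own conversion may differ in details such as bin alignment.

```python
import numpy as np


def hist_null_to_p(observed, bins, weights):
    """Upper-tail p-value from a histogram null: share of weight at or above a bin."""
    bins = np.asarray(bins, dtype=float)
    weights = np.asarray(weights, dtype=float)
    probs = weights / weights.sum()
    # Survival function: P(null >= bins[i]), accumulated from the top bin down.
    survival = np.cumsum(probs[::-1])[::-1]
    # Use the highest bin not above the observed value (conservative between bins;
    # values beyond the last bin get that bin's probability rather than 0).
    idx = np.clip(np.searchsorted(bins, observed, side="right") - 1, 0, len(bins) - 1)
    return survival[idx]


bins = [0.0, 0.1, 0.2, 0.3]  # toy bin values
weights = [5, 3, 1, 1]       # toy counts (Monte Carlo style) or floats (approximate style)
print(hist_null_to_p(0.25, bins, weights))  # about 0.2
```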

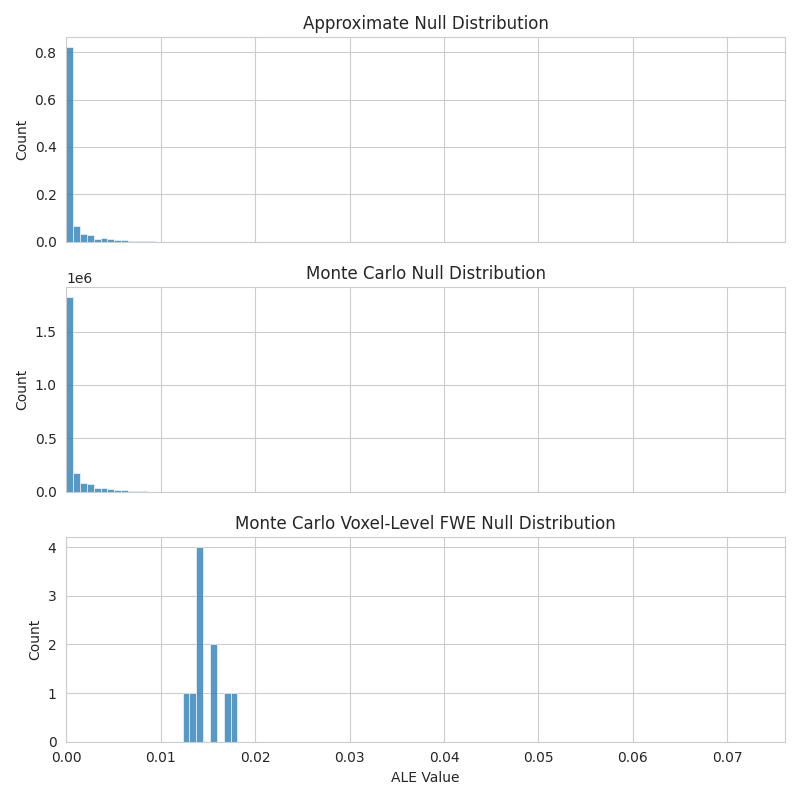

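The null distributions also differ based on the null method. For example, the "montecarlo" option creates a histweights_corr-none_method-montecarlo distribution instead of the histweights_corr-none_method-approximate distribution produced by the "approximate" method. In the output below, it also includes a voxel-level FWE null distribution (histweights_level-voxel_corr-fwe_method-montecarlo).

pprint(mc_meta.null_distributions_)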

Out:

{'histogram_bins': array([0.000e+00, 1.000e-05, 2.000e-05, ..., 7.247e-02, 7.248e-02,

7.249e-02]),

'histweights_corr-none_method-montecarlo': array([873492, 121852, 83620, ..., 0, 0, 0]),

'histweights_level-voxel_corr-fwe_method-montecarlo': array([0, 0, 0, ..., 0, 0, 0])}
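Judging from the printed keys, the histweights_* names appear to follow an underscore-separated entity-value pattern (for example level-voxel, corr-fwe, method-montecarlo). The hypothetical parse_null_key helper below is inferred only from these outputs, not from a documented specification. It shows how you might select distributions by method.

```python
def parse_null_key(key):
    """Split e.g. 'histweights_corr-none_method-approximate' into a prefix and entities."""
    if "-" not in key:
        # Keys such as 'histogram_bins' carry no entity-value pairs.
        return key, {}
    prefix, *parts = key.split("_")
    return prefix, dict(part.split("-", 1) for part in parts)


keys = [
    "histogram_bins",
    "histweights_corr-none_method-montecarlo",
    "histweights_level-voxel_corr-fwe_method-montecarlo",
]
mc_keys = [k for k in keys if parse_null_key(k)[1].get("method") == "montecarlo"]
print(mc_keys)
```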

import matplotlib.pyplot as plt
import seaborn as sns

with sns.axes_style("whitegrid"):
    fig, axes = plt.subplots(figsize=(8, 8), sharex=True, nrows=3)

    # Uncorrected null distribution from the approximate method
    sns.histplot(
        x=meta.null_distributions_["histogram_bins"],
        weights=meta.null_distributions_["histweights_corr-none_method-approximate"],
        bins=100,
        ax=axes[0],
    )
    axes[0].set_xlim(0, None)
    axes[0].set_title("Approximate Null Distribution")

    # Uncorrected null distribution from the Monte Carlo method
    sns.histplot(
        x=mc_meta.null_distributions_["histogram_bins"],
        weights=mc_meta.null_distributions_["histweights_corr-none_method-montecarlo"],
        bins=100,
        ax=axes[1],
    )
    axes[1].set_title("Monte Carlo Null Distribution")

    # Voxel-level FWE null distribution from the Monte Carlo method
    sns.histplot(
        x=mc_meta.null_distributions_["histogram_bins"],
        weights=mc_meta.null_distributions_["histweights_level-voxel_corr-fwe_method-montecarlo"],
        bins=100,
        ax=axes[2],
    )
    axes[2].set_title("Monte Carlo Voxel-Level FWE Null Distribution")
    axes[2].set_xlabel("ALE Value")
    fig.tight_layout()
